ssh -X username@serverStart working on an HPC

Getting started

Next, let’s go over some basic commands that are useful when working on an HPC.

Generally, you schedule jobs on an HPC for them to run effectively. The scheduler used on Crunchomics is called slurm and allows you to:

- allocate resources on compute nodes

- helps you start, run, and keep track of work on the assigned node

- handles conflicts for resources by keeping track of a waiting list for pending work

Since you share resources with other people when using an HPC:

Choose the resources you use wisely and allow other users to also run some work. For big projects on the Uva Crunchomics HPC you are encouraged to contact Wim de Leeuw w.c.deleeuw@uva.nl beforehand.

Connecting to a sever

SSH (Secure Shell) is a network protocol that enables secure remote connections between two systems. The general ssh command that you can use to login into any HPC looks as follows:

Options:

-Xoption enables untrusted X11 forwarding in SSH. Untrusted means = your local client sends a command to the remote machine and receives the graphical output. Put simply this option enables us to run graphical applications on a remote server and this for example allows us to view a pdf.

If you have access to and want to connect to Crunchomics you would edit the command above to look like this:

ssh -X uvanetid@omics-h0.science.uva.nlIf you want to log into Crunchomics from outside of UvA you need to be connected to the VPN. If you have not set that up and/or have trouble doing so, please contact ICT.

Setup for first time Crunchomics users

If you have access to Crunchomics and have not used Crunchomics before, then you want to first run a small Bash script that:

- Allows you to use system-wide installed software, by adding

/zfs/omics/software/binto your path. This basically means that bash knows that there is an extra folder in which to look for any software that is already installed - Sets up a python3 environment and some useful python packages

- Have a link for your 500 GB personal directory in your home directory

To set this up, run the following command in the cli:

/zfs/omics/software/script/omics_install_scriptSlurm basics

Get information about the cluster

Let’s start by getting some basic information about what nodes are avaiable on a cluster by typing the following command into the cli:

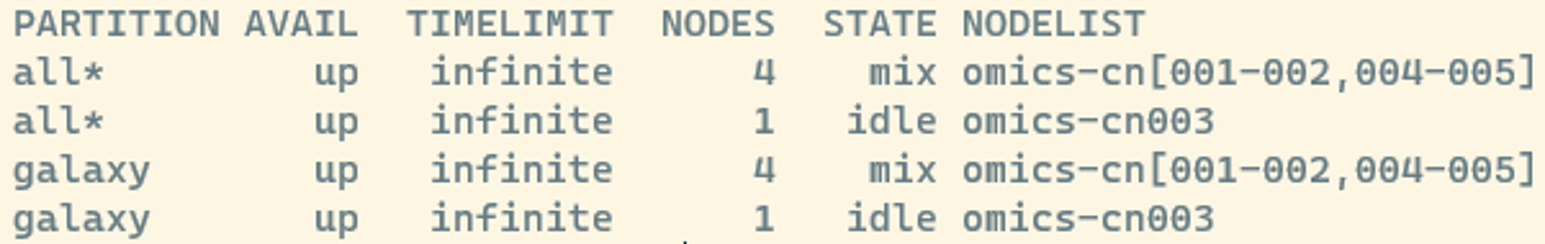

sinfoWe will see something like this:

Here, you see information about the:

- partition: the queues that are available

- state: if a node is busy or not

- mix : consumable resources partially allocated

- idle : available to requests consumable resources

- drain : unavailable for use per system administrator request

- alloc : consumable resources fully allocated

- down : unavailable for use.

- Nodes: The number of nodes

- NodeList: the names of the nodes omics-cn001 to omics-cn005

View info about jobs in the queue

The following commands gives us some information about how busy the HPC is:

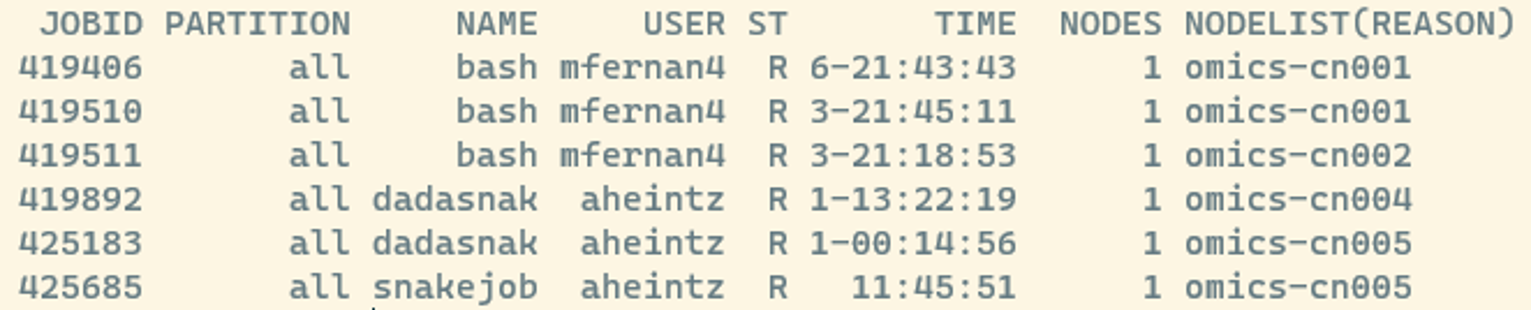

squeueAfter running this, you can see all jobs scheduled on the HPC:

- JOBID: every job gets a number and you can manipulate jobs via this number

- ST: Job state codes that describe the current state of the job. The full list of abbreviations can be found here

Unsure about the resources each node has?

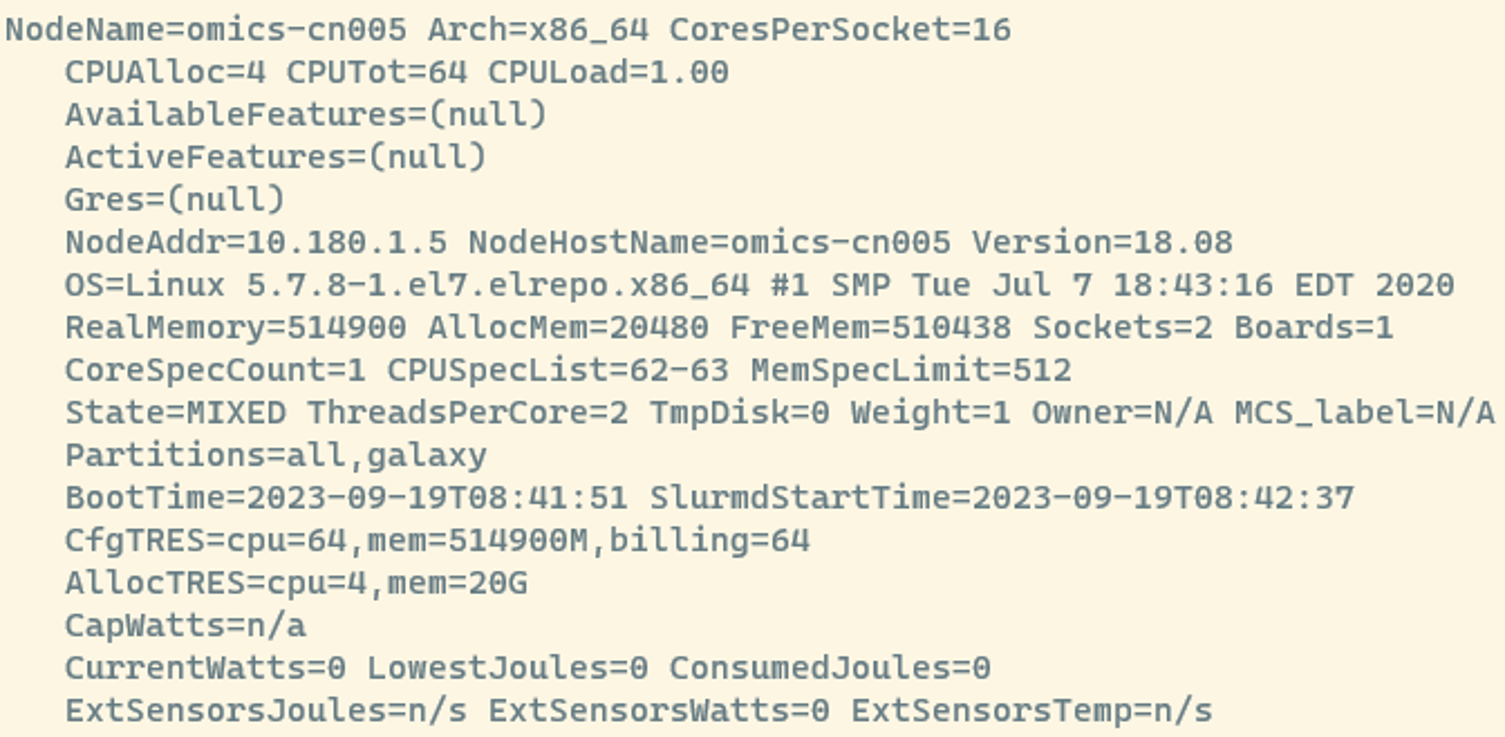

If you are unsure about how many resources a compute node has available you can get more information using the following command:

scontrol show node

This is a lot of information but here are some useful things to look at:

- NodeName=omics-cn005: This is the name of the node you’re inspecting. Each node has a unique name for identification.

- CPUAlloc=4 CPUTot=64 CPULoad=1.00:

- CPUAlloc=4: Currently, 4 CPUs are allocated or actively being used.

- CPUTot=64: The total number of CPUs available on this node is 64.

- CPULoad=1.00: The CPU load gives you an idea of how busy the CPUs are. A load of 1.00 means they’re fully utilized.

- RealMemory=514900 AllocMem=20480 FreeMem=510438:

- RealMemory=514900: The total physical memory (RAM) on this node is 514900 megabytes or 512 Gb.

- AllocMem=20480: Currently, 20 gigabytes of memory are allocated or in use.

- FreeMem=510438: There are 510438 megabytes of free memory available.

- AllocTRES=cpu=4,mem=20G:

- AllocTRES shows the resources currently allocated. 4 CPUs and 20 gigabytes of memory are currently in use.

If unsure about what resources are available:

- Check CPUTot for the total CPUs on the node

- Look at CPUAlloc for the currently allocated CPUs.

- Examine RealMemory for total available memory and FreeMem for the unused portion.

Get information about running jobs

To get a better feel about how many resources a job used we can use sacct, which displays accounting data for all jobs and job steps in the Slurm job accounting log or Slurm database.

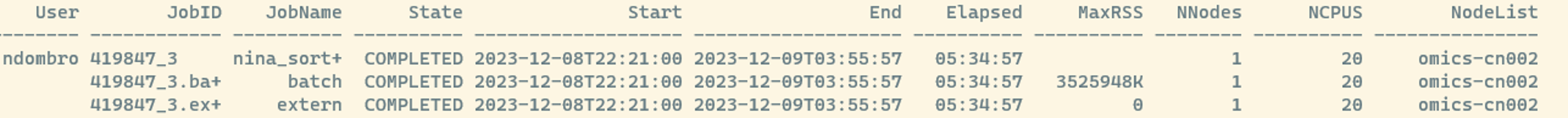

In the command below 419847 is the job ID of a currently running or finished job and -with --format we create a custom report with some useful information:

sacct -j 419847 --format=User,JobID,Jobname,state,start,end,elapsed,MaxRss,nnodes,ncpus,nodelist

This tells us:

- In the example the first row corresponds to the job itself and the other rows are job steps. If you run things in parallel you will get several additional rows and can see how many resources each job used

- How long the job run (or each job for parallel jobs)

- MaxRSS: the highest amount of memory your job used while it was running in kilobytes. In our example we see the job used 3525948 kilobytes or 3.5 Gigabytes.

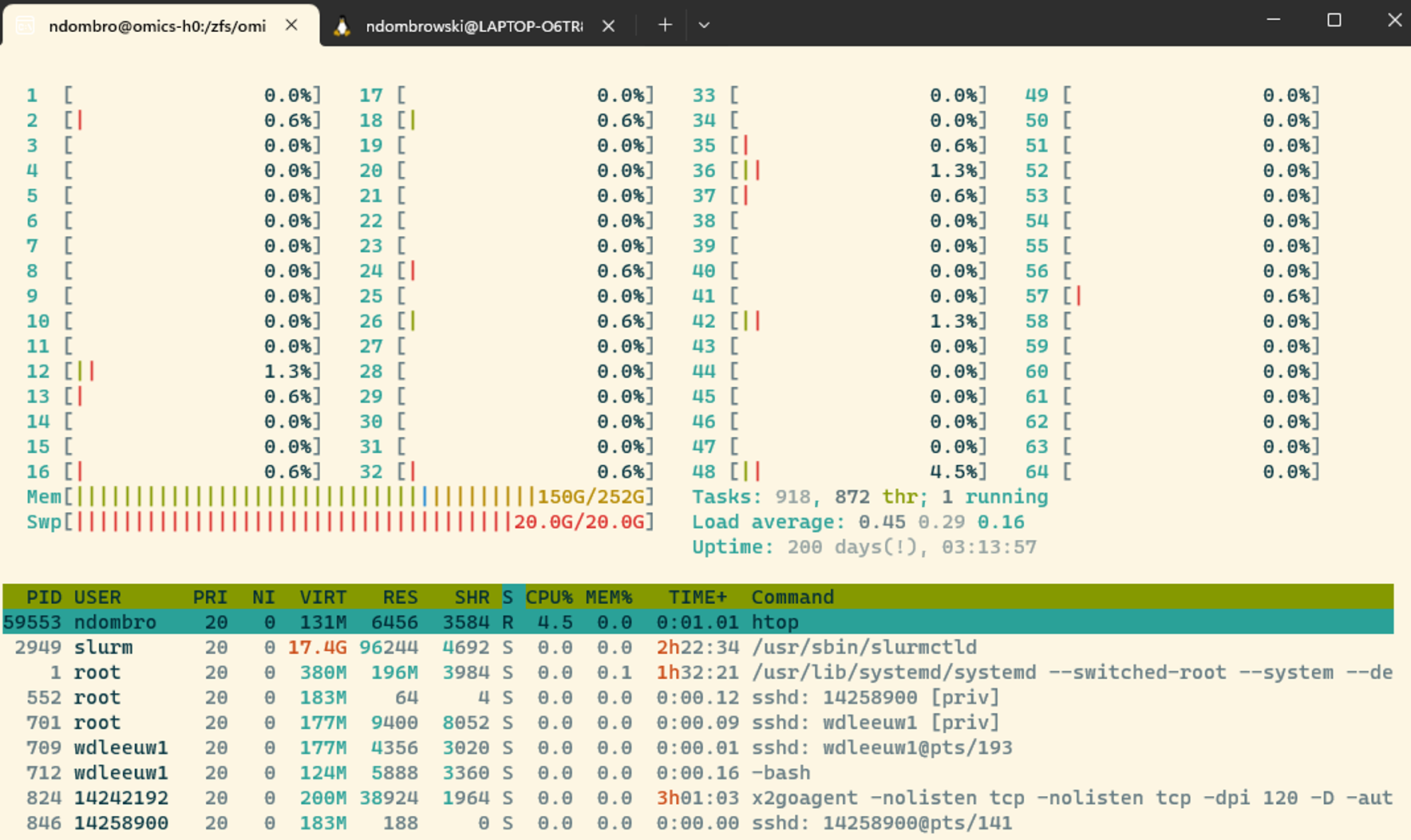

htop: How busy is a node

Htop gives us an overview about how busy a node is, typing htop will give a screen that looks something like this:

The numbers from 1-64 are the 64 CPUs available on the Crunchomics login ndode and the fuller the bar is, the more of a single CPU is currently in use. This is also summarized under tasks. Another important line is the memory listing how much in total of the avail. memory is in use.

You exit the htop window by typing q.

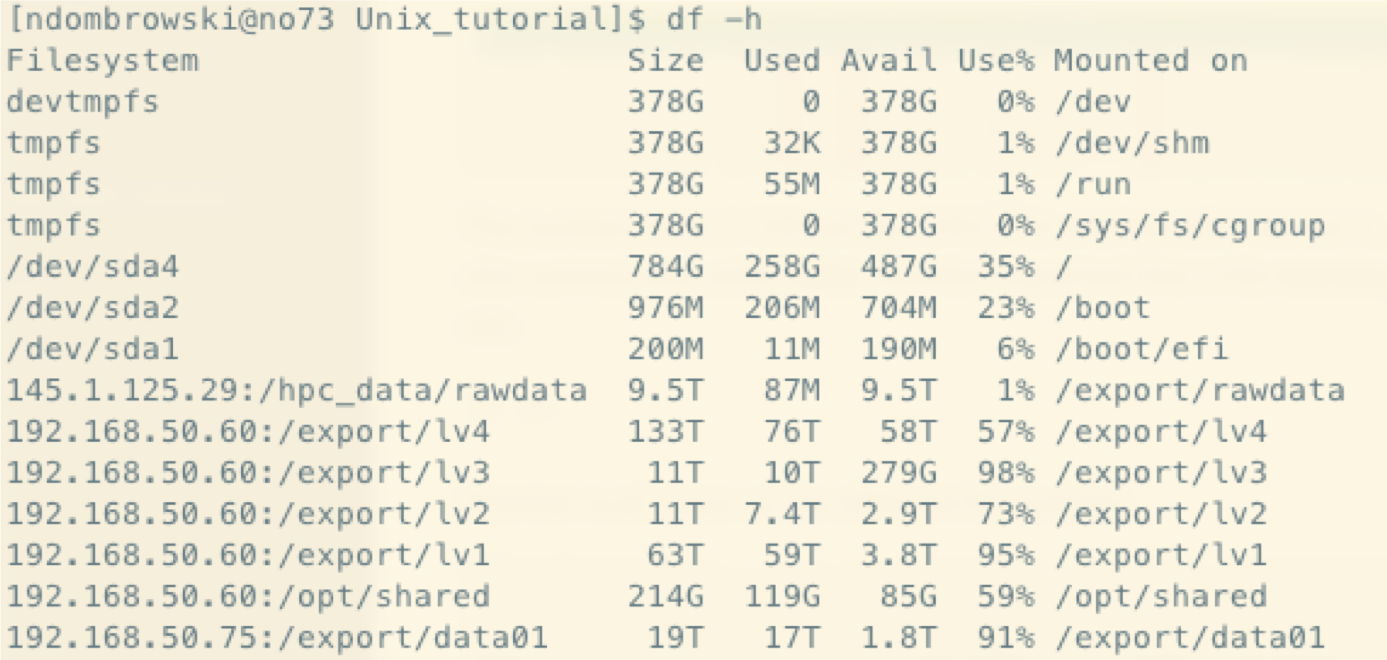

df: Disk space usage

df monitors the available space on the different file systems. There might be a lot of different files listed but it quickly allows you to look for the folders you have access to and check how much space you have available.

du: Checking the size of a folder

du monitors how much space specific folders take up. In the example below we look at all the folders in folder called personal. If your folder is large and has many individual files, give this a bit of time to run.

du -sh personal/Transferring files

scp: Transferring data from/to a server

scp stands for Secure Copy Protocol and allows us to securely copy files and directories between remote hosts. When transferring data the transfer is prepared from the terminal of your local computer and not from the HPCs login node.

The basic syntax is:

scp [options] SOURCE DESTINATION

Let’s assume we have a file, file.txt, on our personal computer and want to move it to an HPC. To be able to do this, we run our commands from the terminal of the local machine, not on the HPC itself:

scp file.txt username@server:/path_to_target_folderFor the case of us having a text file on the server and want to move to our personal computer we can do this as follows. Again, we run this command from a terminal on your computer, not while being logged in the HPC:

scp username@server:/path_to_target_folder/file.txt DesktopThere are graphical interace filetransfer systems that can make the job easier, i.e. FileZilla, that you can check up on.

rsync: Synchronize files and directories between two hosts or machines

While scp is handy for transferring files, rsync takes it a step further by efficiently synchronizing files and directories. This is particularly useful for large datasets (imagine having to move several terrabytes) and for updating files without transferring the entire content.

This can be useful for transferring large files from an HPC to your computer but also when transferring large files from one to another location on the same machine.

To copy a file from your local machine to an HPC using rsync, use the following command:

rsync -avz file.txt username@server:/path_to_target_folderUsed options:

-a: Archive mode, preserving permissions and other attributes.-v: Verbose, showing the files being copied.-z: Compress data during transfer, reducing the amount of data sent over the network.

Conversely, to copy a file from the server to your local machine, execute the following command:

rsync -avz username@server:/path_to_source_folder/file.txt DesktopFor more examples, check out this page.

Submitting a job

srun

srun is used when you want to run tasks interactively or have more control over the execution. You directly issue srun commands in the terminal, specifying the tasks to be executed and their resource requirements.

Use srun when:

- You want to run tasks interactively and need immediate feedback.

- You are testing or debugging your commands before incorporating them into a script.

- You need more control over the execution of tasks.

A simple example:

srun echo "Hello interactively"Assuming you want to run a more complex interactive task with srun it is good to specify the resources your job needs by adding flags, i.e.

srun --nodes=1 --ntasks=1 --cpus-per-task=1 --mem=1G echo "Hello interactively"--nodes=1: Specifies the number of nodes. In this case, it’s set to 1 and tells slurm that we want to use a full node. Only use this if you make use of all resources on that node, otherwise omit.--ntasks=1: Defines the number of tasks to run. Here, it’s set to 1--cpus-per-task=1: Specifies the number of CPUs per task. Adjust this based on the computational requirements of your task.--mem=1G: Sets the memory requirement for the task. Modify this based on your task’s memory needs.echo "Hello interactively: The actual command you want to run interactively.

Choosing the right amount of resources

When you’re just starting, deciding on the right resources to request for your computational job can be a bit challenging. The resource requirements can vary significantly based on the specific tool or workflow you are using. Here are some general guidelines to help you make informed choices:

- Use default settings: Begin by working with the default settings provided by the HPC cluster. These are often set to provide a balanced resource allocation for a wide range of tasks

- Check the software documentation: Consult the documentation of the software or tool you are using. Many tools provide recommendations for resource allocation based on the nature of the computation.

- Testing with Small Datasets: For initial testing and debugging, consider working with a smaller subset of your dataset. This allows for faster job turnaround times, helping you identify and resolve issues more efficiently.

- Monitor the resources usage:

- Use

sacctto check what resources a finished job has used. Look for columns like MaxRSS (maximum resident set size) to check if the amount of memory allocated (–mem) was appropriate. - For instance, with

--cpus-per-task=4 --mem=4G, you would expect to use a total of 16 GB of memory (4 CPUs * 4 GB). Verify this with sacct to ensure your job’s resource requirements align with its actual usage.

- Use

- Fine-Tuning Resource Requests: If you encounter performance issues or your jobs are not completing successfully, consider iteratively adjusting resource requests. This might involve increasing or decreasing the number of CPUs, memory allocation, or other relevant parameters.

sbatch

sbatch is your go-to command when you have a script (a batch script) that needs to be executed without direct user interaction.

Use sbatch when:

- You have long-running or resource-intensive tasks.

- You want to submit jobs that can run independently without your immediate supervision

- You want to submit multiple jobs at once

To run a job script, you:

- create a script that contains all the commands and configurations needed for your job

- use sbatch to submit this script to the Slurm scheduler, and it takes care of the rest.

To get started, assume we have created a script named first_batch_script.sh with the following content in which we want to run two commands after each other:

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=2

#SBATCH --mem=2G

# Job Step 1

echo "Hello from your extended batch script"

# Job Step 2

echo "Today we are using slurm"The we can submit first_batch_script.sh with:

sbatch first_batch_script.shAfter running this, you will see that a new file is generated that will look something like this slurm-425707.out. When you submit a batch job using sbatch, Slurm redirects the standard output and standard error streams of your job to a file named in the format slurm-JOBID.out, where JOBID is the unique identifier assigned to your job.

This file is useful as it:

- Captures the output of our batch scripts and stores them in a file

- Can be used for debugging, since if something goes wrong with your job, examining the contents of this file can provide valuable insights into the issue. Error messages, warnings, or unexpected outputs are often recorded here.

arrays: running things in parallel

In bioinformatics its very common to run the same command on several input files. Instead of copy pasting the same command several times, one option is to write the command once and use a slurm array to run the command in parallel on multiple files.

Assume we have three samples and for each sample we have generated a file with sequencing data:

- sample1.fastq.gz

- sample2.fastq.gz

- sample3.fastq.gz

We might want to do some cleaning of the reads, for example remove adaptors from all three files. We start with making a file that list all samples we want to work with (i.e. using nano or cp from excel) called sample_list which might look like this:

sample1

sample2

sample3We then can write a sbatch script, i.e. arrays.sh, as follows:

#!/bin/bash

#SBATCH --job-name=our_job

#SBATCH --output=%A_%a.out

#SBATCH --error=%A_%a.err

#SBATCH --array=1-3%2

#SBATCH --cpus-per-task=20

#SBATCH --mem-per-cpu=5G

#SBATCH --mail-type=END,FAIL

#SBATCH --mail-user=user@uva.nl

#calculate the index of the current job within the batch

INDEX=$((SLURM_ARRAY_TASK_ID ))

#build array structure via ale file names

CURRENT_SAMPLE=$(cat sample_list | sort -V | sed -n "${INDEX}p")

echo "Now Job${INDEX} runs on ${CURRENT_SAMPLE}"

porechop --input ${CURRENT_SAMPLE}.fastq.gz \

--output outputfolder/${CURRENT_SAMPLE}_filtered.fastq.gz \

--threads 20 \

--discard_middleThe first section contains parameters for Slurm, the job scheduler.

--job-name:Specifies the name of the job.--outputand--error: Define the naming pattern for the standard output and error files.%Arepresents the job ID assigned by Slurm, and%arepresents the array task ID, which is the index of the current job within the array. After running the job, you will get files that might be named 123456_1.out or 123456_1.err, where 123456 is the job ID and 1 is the array task ID.--array: Sets up a job array, specifying the range (1-3) and stride (%2) for the array. It says that we want to run 3 jobs and two jobs should be run at the same time--cpus-per-task: Indicates the number of CPU cores each task in the array will use--mem-per-cpu: Specifies the memory requirement per CPU core.--mail-typeand--mail-user: Configure email notifications for job completion or failure.

The job does the following:

- The

INDEXvariable is calculated using theSLURM_ARRAY_TASK_ID, representing the index of the current job within the array. In our case this will be first 1, then 2 and finally 3. - Next, we build the array structure. The

CURRENT_SAMPLEvariable is created by reading thesample_listfile, sorting it using sort -V (version sorting), and extracting the sample at the calculated index using sed. So for the first index we extract sample1 and store it in the variableCURRENT_SAMPLE. - The porechop command is executed using the

CURRENT_SAMPLEvariable, processing the corresponding FASTQ file. So for the first array, it will trim adapters from sample1.fastq.gz